Meta reveals how Make-A-Video produces AI art GIFs

To date, the term “AI art” has meant “static images.” No longer. Meta is showing off Make-A-Video, in which the company combines AI art and interpolation to create short, looping video GIFs.

Make-A-Video.studio isn’t available to the public yet. Instead, it’s being shown as a demonstration of what Meta itself can do with the technology. And yes, while this is technically video, in the sense that there are more than a few frames of AI art strung together, it’s still probably closer to a traditional GIF than anything else.

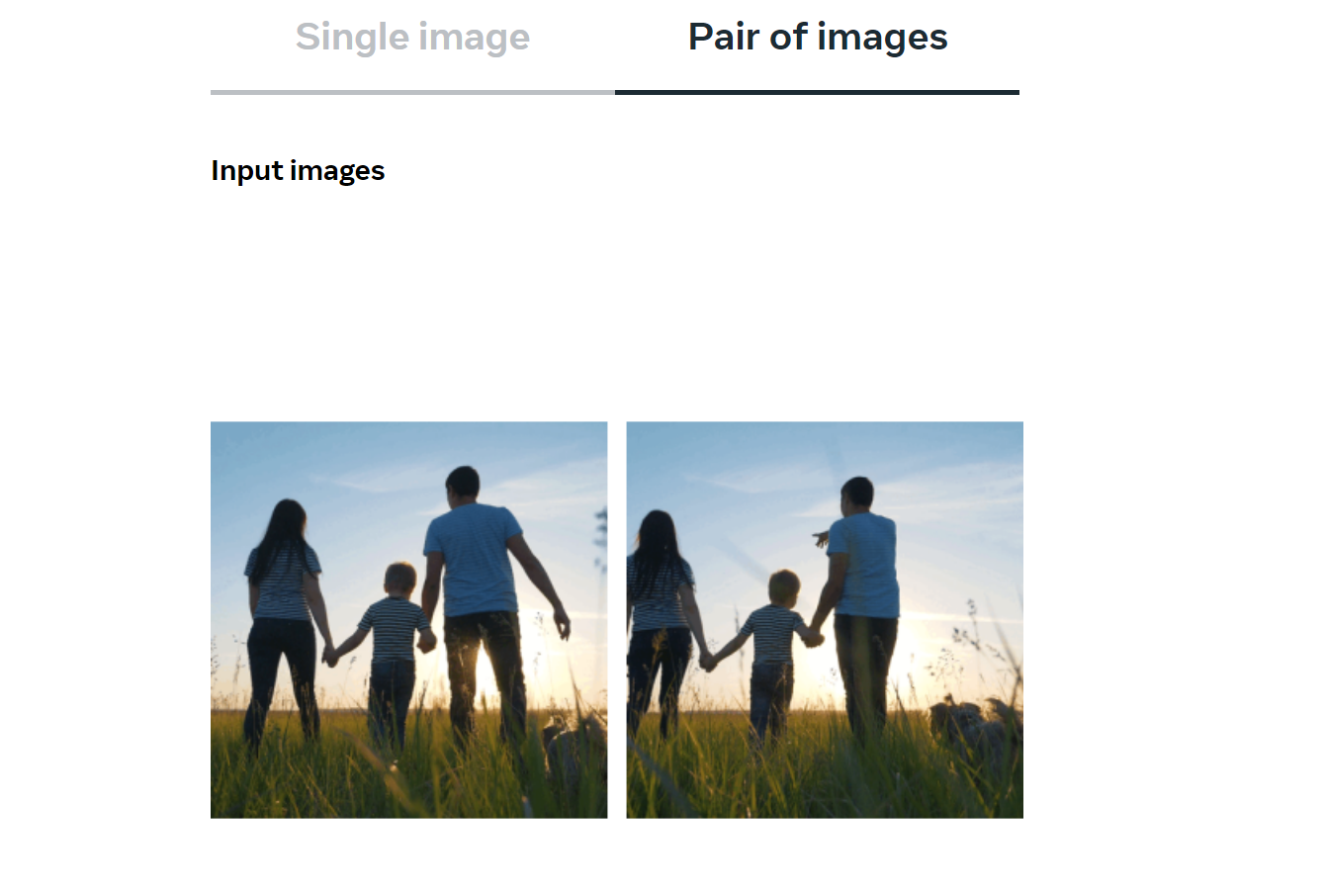

No matter. What Make-A-Video accomplishes is three-fold, judging by the demonstration on Meta’s site. First, the technology can take two related images, whether they are of a water droplet in flight or photos of a horse in full gallop, and create the intervening frames. More impressively, Make-A-Video seems to be able to take a still image and apply motion to it in an intelligent fashion, taking a still image of a boat, for example, and creating a short video of it moving across the waves.
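To make the first capability concrete, here is a deliberately naive sketch of generating in-between frames from two images. It is not Meta’s method: Make-A-Video relies on a learned model, while this example simply cross-fades pixel values. The `Frame` type and the `interpolate_frames` function are hypothetical names chosen for illustration.

```python
from typing import List

# Hypothetical representation: a grayscale frame as rows of pixel values.
Frame = List[List[float]]


def interpolate_frames(start: Frame, end: Frame, n_between: int) -> List[Frame]:
    """Return n_between frames linearly blended between start and end.

    This is a plain cross-fade, used here only as a stand-in for the
    learned interpolation described in the article.
    """
    if n_between < 0:
        raise ValueError("n_between must be non-negative")
    if len(start) != len(end) or any(
        len(a) != len(b) for a, b in zip(start, end)
    ):
        raise ValueError("frames must have the same dimensions")

    frames: List[Frame] = []
    for i in range(1, n_between + 1):
        t = i / (n_between + 1)  # blend weight, strictly between 0 and 1
        frames.append([
            [(1 - t) * a + t * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(start, end)
        ])
    return frames
```

A cross-fade like this only blends brightness, so a galloping horse would ghost rather than move. That gap is what a model trained on real video is meant to close.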

Finally, Make-A-Video can put it all together. From a prompt, “a teddy bear painting a portrait,” Meta showed off a small GIF of an animated teddy bear painting itself. That shows not only the ability to create AI art, but also to infer motion from it, as the company’s research paper indicates.

“Make-A-Video research builds on the recent progress made in text-to-image generation technology built to enable text-to-video generation,” Meta explains. “The system uses images with descriptions to learn what the world looks like and how it is often described. It also uses unlabeled videos to learn how the world moves. With this data, Make-A-Video lets you bring your imagination to life by generating whimsical, one-of-a-kind videos with just a few words or lines of text.”

That probably means that Meta is training the algorithm on real video that it has captured. What isn’t clear is how that video is being inputted. Facebook’s research paper on the topic doesn’t indicate how video could be sourced in the future, and one has to wonder whether anonymized video captured from Facebook could be used as the seed for future art.

This isn’t entirely new, at least conceptually. Animations like VQGAN+CLIP Turbo can take a text prompt and turn it into an animated video, but Meta’s work appears more sophisticated. It’s hard to say, though, until the model is released for an audience to play with.

Still, this takes AI art into another dimension: that of motion. How long will it be before Midjourney and Stable Diffusion do the same on your PC?
