What is Sustainable Computing?

When it comes to the topic of greenhouse gas emissions, the traditional culprits usually fall under the umbrella categories of transportation, fossil fuel, agriculture, or "electricity." But if we dig a little deeper, we find that many of these industries are propped up by the impressive technology sector of the past few decades. And just like Moore's law before it, the technology sector's carbon footprint is increasing at a blistering pace.

For example, the electricity consumption of data centers globally is estimated to exceed 205 terawatt hours per year. This number is more than the annual electricity consumption of countries such as Ireland, Denmark, Taiwan, or South Africa. Furthermore, at the moment only 61% of the world population is online, and modest projections show that by 2030, more than 43 billion IoT devices will be tapped into the ever-interconnected market sphere.

At such a massive scale, it is projected that information and communications technology (ICT) will account for 7% to 20% of global energy demand by 2030.

Using renewable energy is just one part of the complex, multi-faceted equation for carbon-neutrality, and chasing the elusive environmental footprint of carbon has led to some counter-intuitive observations in energy utilization.

As computing becomes more and more ubiquitous, we seek to shed some light on why building sustainable computing systems is not only the conscientious thing to do, but can also lead to better efficiency for both computing systems and the planet.

In this article, we break down some of the biggest contributors to the carbon footprint within the computing industry, and describe how modern techniques aimed at curbing carbon emissions may actually result in a seismic shift in how we develop the hardware of the future. Buckle up, since this will be quite the ride…

Why is this relevant now?

Recent trends have put the computing industry in the spotlight for carbon emissions, and not in a good way. The prevalent business model across tech companies encourages throwing out "old" tech as soon as "new" tech is released. This constant churn of technology, such as mobile phone upgrades, creates a growing stockpile of "waste" that is very difficult to recycle, let alone resell in an alternative market.

Cryptocurrency mining has taken the world by storm over the past decade. The blockchain's proof-of-work model requires massive energy consumption distributed across many machines, and this has been one of the biggest criticisms of the technology in the public sphere.
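To see why proof-of-work is so energy-hungry, here is a toy sketch. It is a simplification, not Bitcoin's actual protocol (which double-hashes a structured block header against a network-wide target): it brute-forces a nonce until the SHA-256 digest of some hypothetical block data falls below a target. Every extra bit of difficulty doubles the expected number of hashes, and in a real network that work is repeated by many competing machines.

```python
import hashlib

def proof_of_work(block_data: str, difficulty_bits: int) -> tuple[int, str]:
    """Toy proof-of-work: find a nonce whose SHA-256 digest has at least
    `difficulty_bits` leading zero bits (simplified, not a real blockchain protocol)."""
    target = 1 << (256 - difficulty_bits)  # digest, read as an integer, must fall below this
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if int(digest, 16) < target:
            return nonce, digest
        nonce += 1  # no shortcut exists: just try the next nonce

# Expected work grows as roughly 2**difficulty_bits hashes.
for bits in (8, 12, 16):
    nonce, digest = proof_of_work("toy block", bits)
    print(f"{bits} bits: {nonce + 1} hashes tried, digest {digest[:12]}...")
```

The only way to "win" is to compute more hashes, which is exactly why the energy bill scales with the difficulty and the number of participants.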

Then there is the "cloud" (i.e., data centers), which power nearly all our everyday services and keep us interconnected. These behemoths need to keep scaling to serve all our current and future needs, from personal usage such as photo storage or TV binging, to industrial-scale services such as managing air traffic and running governments.

These activities and services operate 24/7, and any downtime would mean a considerable loss in quality of life for many. Of course, this also takes a huge carbon toll on our planet.

While power efficiency has always been a primary consideration when designing new hardware (as highlighted by our CPU and GPU reviews here on VFAB), it covers only one aspect of the equation. Designing for a sustainable computing environment requires a holistic look at the environmental impact of technology during its lifetime, from chip manufacturing at the production stage, all the way to the recycling of semiconductors at the end-of-life phase.

As an added incentive, government funding is now being directed to this effort, too. The US National Science Foundation (NSF) recently put out a call for research proposals explicitly highlighting the need for sustainable computing efforts. And thus, where the money leads, innovation (usually) follows.

What's the source?

It would be easy to simply point the finger at cryptocurrency miners and data center companies as the culprits behind rising carbon emissions in the ICT sector. However, their emissions are operational, coming from the use of hardware, and that is only one part of the picture. Carbon emissions from production, manufacturing, transport, and end-of-life processing actually overshadow the operational carbon footprint substantially.

This is what Harvard researchers recently uncovered by poring over publicly available corporate carbon emissions reports from companies including AMD, Apple, Google, Huawei, Intel, Microsoft, TSMC, and others.

The results show that the carbon footprint today is overwhelmingly capex-related (from one-time infrastructure and hardware cost), rather than opex-incurred (recurring operations).

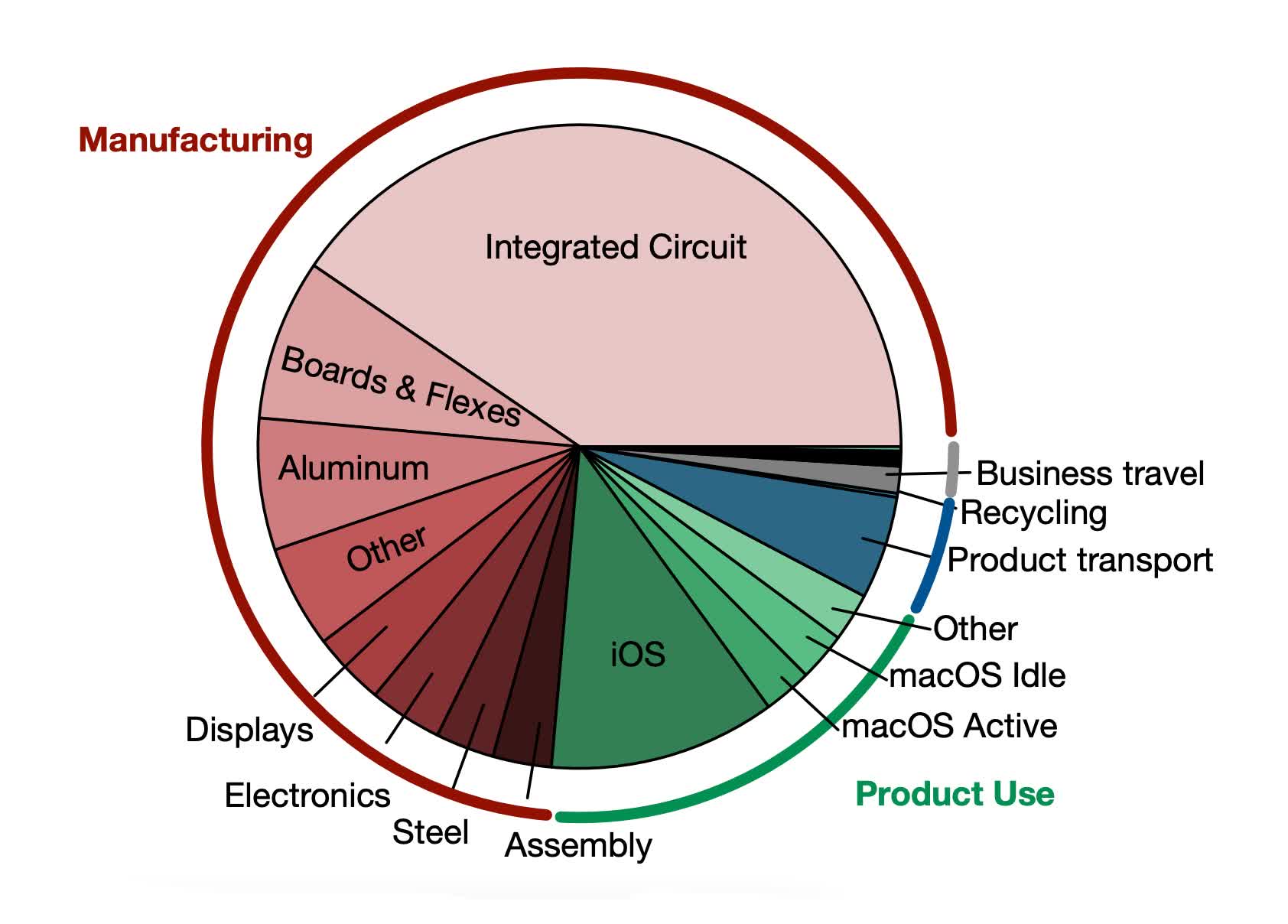

For example, 74% of Apple's carbon footprint in 2019 came from manufacturing, while hardware use accounted for only 19%. Integrated circuit manufacturing was the single largest source of carbon emissions (33%), highlighting the need to innovate on the production side for sustainable computing.

Fuel combustion (diesel, natural gas, and gasoline) accounts for just a small fraction of the direct emissions from operating data centers. In chip manufacturing, however, it forms a large fraction of the carbon footprint: over 63% of the emissions from manufacturing 12-inch wafers at TSMC, for example.

Breaking down the lifetime of a computer system into four distinct phases helps shed more light on the possible sources of carbon emissions. These include:

- Production: a capex source; involving material procurement, IC design, packaging, and assembly

- Product Transportation: a capex source; moving a product or hardware to its point-of-use

- Product Use: an opex source; utilization of the hardware during its lifetime, including both static and dynamic power consumption

- End-of-Life: a capex source; recycling and end-of-life carbon emissions, potentially for reuse in future systems.
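The four phases map naturally onto a small accounting model that separates capex from opex. The sketch below is a hypothetical illustration: the class name, field names, and the numbers for the example phone are made up for demonstration and are not taken from any vendor's report.

```python
from dataclasses import dataclass

@dataclass
class LifecycleFootprint:
    """Carbon footprint (kg CO2e) of one device, split into the four lifecycle phases."""
    production: float      # capex: materials, IC design/fabrication, packaging, assembly
    transportation: float  # capex: moving the product to its point of use
    use: float             # opex: static + dynamic power over the device's lifetime
    end_of_life: float     # capex: recycling and end-of-life processing

    @property
    def capex(self) -> float:
        return self.production + self.transportation + self.end_of_life

    @property
    def opex(self) -> float:
        return self.use

    @property
    def total(self) -> float:
        return self.capex + self.opex

    def capex_share(self) -> float:
        return self.capex / self.total

# Hypothetical numbers for illustration only.
phone = LifecycleFootprint(production=55.0, transportation=4.0, use=15.0, end_of_life=1.0)
print(f"total {phone.total} kg CO2e, capex share {phone.capex_share():.0%}")
```

With these made-up figures, 80% of the phone's footprint is capex, the same kind of production-dominated split described above.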

Although manufacturing forms a substantial portion of total carbon emissions, always-on devices (such as gaming consoles or smart devices) incur a larger opex cost than capex. Optimizing for power efficiency therefore remains an important design constraint, but innovative measures are also needed to tackle the non-operational costs of production, transportation, and recycling.
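A back-of-the-envelope way to see why always-on devices behave differently is to compute how long it takes for operational emissions to catch up with the embodied (capex) footprint. The sketch assumes a constant average power draw and a fixed grid carbon intensity; the function names and device figures are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365

def annual_operational_kg(avg_power_watts: float, grid_kg_per_kwh: float) -> float:
    """Operational (opex) emissions in kg CO2e for a device powered on all year."""
    kwh_per_year = avg_power_watts * HOURS_PER_YEAR / 1000
    return kwh_per_year * grid_kg_per_kwh

def breakeven_years(embodied_kg: float, avg_power_watts: float, grid_kg_per_kwh: float) -> float:
    """Years of use after which cumulative opex equals the embodied (capex) footprint."""
    return embodied_kg / annual_operational_kg(avg_power_watts, grid_kg_per_kwh)

GRID_KG_PER_KWH = 0.4  # assumed grid carbon intensity

# Hypothetical devices: (embodied kg CO2e, average power draw in watts)
devices = {
    "always-on console": (100.0, 50.0),
    "smartphone (avg. 1 W)": (60.0, 1.0),
}
for name, (embodied, watts) in devices.items():
    years = breakeven_years(embodied, watts, GRID_KG_PER_KWH)
    print(f"{name}: opex overtakes capex after {years:.1f} years")
```

With these assumptions the console's operational emissions overtake its embodied footprint in well under a year, while the phone would need well over a decade.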

There seems to be a tension between designing new hardware for better performance and designing hardware in a sustainable manner. However, this does not need to be the case. The computing industry has long benefited from riding Moore's law into the sunset, and many optimizations across computing generations were a result of technology scaling.

Now, there is no longer a free lunch, but many opportunities still do exist by rethinking "traditional" architectural techniques from the angle of sustainability and reliability. Tackling both capex and opex costs is important for the future of sustainable computing.

Let's take a deep dive into one of the fastest-growing industries in recent years: artificial intelligence. AI's achievements in the past decade alone have been extraordinary!

From record-time vaccine development, to autonomous vehicle innovations, to DLSS 2.0 in visual rendering... AI is only just beginning to transform our lives and societies.

But before getting carried away with the immense progress in technology, let's take a step back and explore the environmental impact of AI on our planet. We are not here to point fingers, but rather to introduce a new, sustainability-conscious mindset for future technology development.

Life cycle of an AI system

AI is growing super-linearly on multiple fronts. The rise of big data means that we now generate and store data at exabyte scale. Next, AI models are increasing dramatically in size: OpenAI recently released DALL-E 2, a 5 billion parameter model that generates images from natural language descriptions. Then, of course, there is all the hardware infrastructure needed to power the AI revolution in the cloud and in research labs.

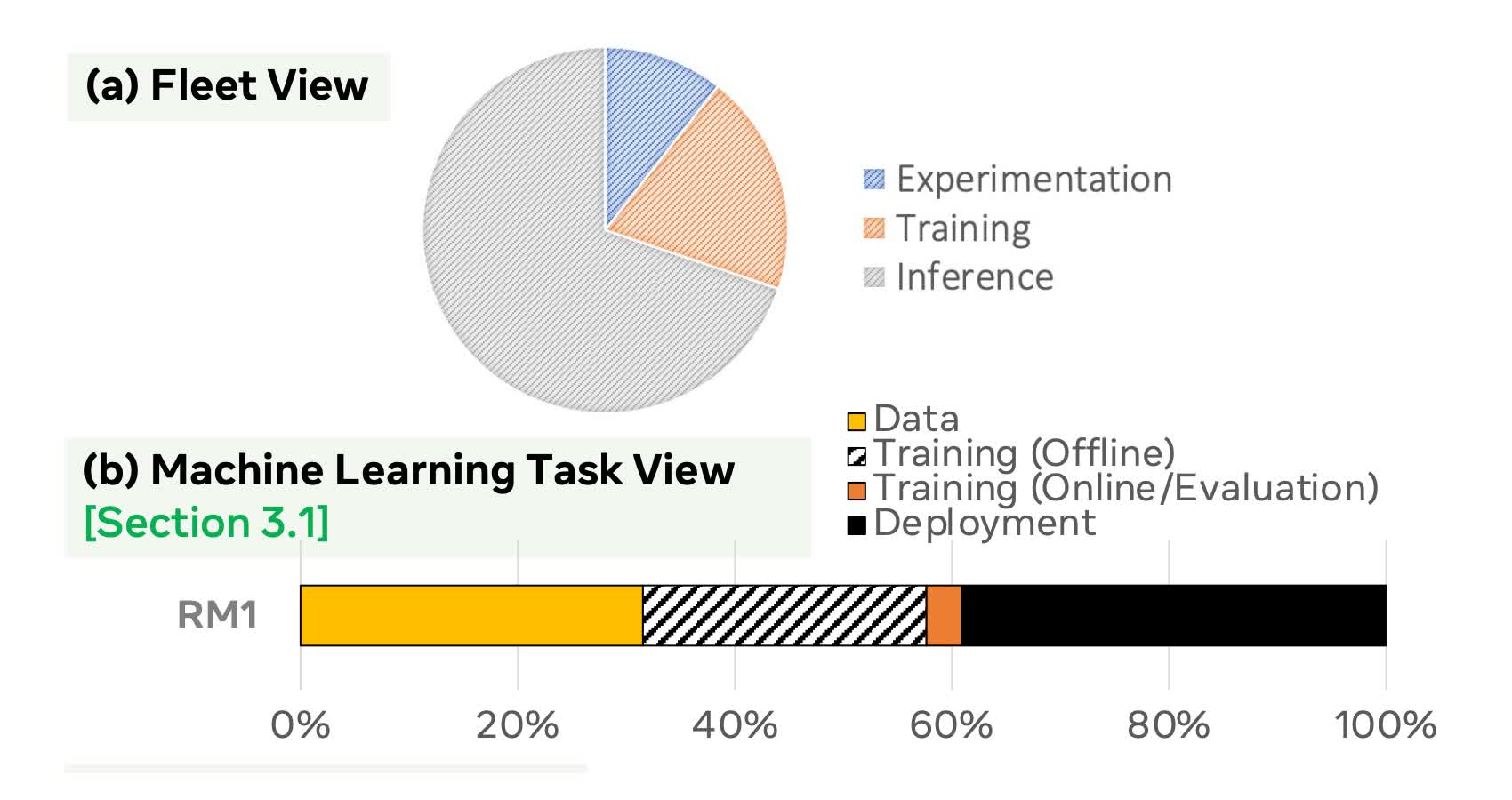

Focusing on the application side, the lifecycle for the development and deployment of an AI system is roughly as follows:

- Data processing

- Experimentation

- Training

- Inference

Data processing involves gathering and cleaning up data, as well as extracting relevant features for developing a machine learning model. The experimentation phase includes developing and evaluating proposed algorithms, model architecture designs, hyperparameter tuning, and various other computationally expensive techniques. Given the large design space, it is common to run many experiments in parallel at scale, on both CPUs and GPUs in the cloud.
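Experimentation gets expensive quickly because exhaustive hyperparameter search multiplies: every added option multiplies the number of training runs. The sketch below counts the runs for a hypothetical search space and converts them to energy under an assumed per-run GPU time and power draw; all names and numbers are placeholders.

```python
import itertools

# Hypothetical search space; every combination is one full training run.
search_space = {
    "learning_rate": [1e-4, 3e-4, 1e-3],
    "batch_size": [32, 64, 128, 256],
    "num_layers": [6, 12, 24],
    "dropout": [0.0, 0.1],
}

configs = list(itertools.product(*search_space.values()))

GPU_HOURS_PER_RUN = 8  # assumed cost of one trial
GPU_POWER_KW = 0.3     # assumed average power draw of one GPU
energy_kwh = len(configs) * GPU_HOURS_PER_RUN * GPU_POWER_KW
print(f"{len(configs)} runs -> {energy_kwh:.1f} kWh")
```

With these placeholders, 3 × 4 × 3 × 2 = 72 runs come to roughly 173 kWh; adding one more option to any dimension scales the total again.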

After experimentation, a promising training routine is developed, which requires extensive amounts of data covering many cases and scenarios. Training the model is itself another compute-intensive task, and may require additional fine-tuning or retraining as more recent data becomes available.

Finally, the model is deployed and used in inference mode. This step involves making predictions dynamically, for example, providing recommendations for what to watch on Netflix or autocompleting queries in a search engine.

While each individual inference may be fast and (potentially) low-compute, the scale at which inference is performed makes it a force to be reckoned with. Deployed models are expected to produce trillions of predictions daily and serve billions of devices across the globe.
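The scale argument is simple arithmetic: a tiny per-prediction cost multiplied by trillions of predictions per day. The per-prediction energy below (0.5 J) is a hypothetical figure chosen for illustration, not a measurement of any real service.

```python
JOULES_PER_KWH = 3.6e6

def daily_inference_energy_kwh(predictions_per_day: float, joules_per_prediction: float) -> float:
    """Total daily energy for serving predictions at a fixed (assumed) cost per prediction."""
    return predictions_per_day * joules_per_prediction / JOULES_PER_KWH

# Assumed: one trillion predictions per day at a hypothetical 0.5 J each.
kwh = daily_inference_energy_kwh(1e12, 0.5)
print(f"{kwh:,.0f} kWh per day, {kwh * 365 / 1e6:,.1f} GWh per year")
```

Under these assumptions, that is roughly 139 MWh per day (about 51 GWh per year) for inference alone.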

Each phase is important, and you cannot dismiss any phase when targeting sustainability. A universal language model for text translation used by Meta, for example, shows a breakdown of 31%, 29%, and 40% for data processing, experimentation/training, and inference, respectively.

Analyzing the effect of AI on carbon emissions primarily involves the manufacturing and use of the system. As described above, the operational footprint of an AI system is substantial across all phases of its life cycle. The manufacturing side includes building the infrastructure for AI systems.

Facebook's sustainability report indicates that manufacturing accounts for more than 50% of the footprint. This means that a large share of the carbon cost is paid up front, in building the hardware infrastructure, before an AI model is even designed and deployed.

How is this architecturally relevant? Well, system designers now have a new knob and metric to tune for: sustainability!

General-purpose GPU acceleration can provide an order-of-magnitude improvement in energy efficiency over CPU-based compute. Designing even more specialized AI accelerators can further improve both performance and sustainability.

Understanding cache-level effects on these systems can also help optimize the carbon footprint of AI systems. What data is used frequently and needs to be kept close for quick access? Is it cheaper to recompute a result, or to store it and read it back from memory? What types of devices (such as DRAM or flash) are best suited to these systems, balancing performance against carbon footprint?
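The recompute-or-store question can be framed as an energy comparison per value. The sketch below uses assumed, order-of-magnitude energy costs (off-chip DRAM reads being far more expensive than arithmetic, on-chip SRAM reads being cheap); the constants and the function name are hypothetical, and a real analysis would also count the embodied carbon of the memory capacity itself.

```python
# Assumed per-operation energies in picojoules (illustrative only).
PJ_PER_FLOP = 4.0         # one 32-bit floating-point operation
PJ_PER_DRAM_WORD = 640.0  # reading one 32-bit word from off-chip DRAM
PJ_PER_SRAM_WORD = 5.0    # reading one 32-bit word from on-chip SRAM/cache

def cheaper_to_recompute(flops_per_value: int, read_pj_per_word: float) -> bool:
    """True if recomputing one 32-bit value costs less energy than reading it from memory."""
    return flops_per_value * PJ_PER_FLOP < read_pj_per_word

for flops in (10, 100, 1000):
    vs_dram = "recompute" if cheaper_to_recompute(flops, PJ_PER_DRAM_WORD) else "read from DRAM"
    vs_sram = "recompute" if cheaper_to_recompute(flops, PJ_PER_SRAM_WORD) else "read from SRAM"
    print(f"{flops:>4} FLOPs per value: {vs_dram} | {vs_sram}")
```

Under these assumptions, a value costing a few hundred FLOPs is cheaper to recompute than to fetch from DRAM, but almost never cheaper than an on-chip cache hit, which is why the answer depends so heavily on where data lives.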

There are also fundamental computing questions that need to be revisited. For example, halving the precision of numbers (from 32-bit to 16-bit operations) can more than double energy efficiency, with minimal impact on an ML model's accuracy. Perhaps even the digital representation of numbers should be questioned, with a move away from IEEE 754 floating point toward number formats that are more energy-conscious yet just as performant.
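NumPy supports both `float32` and `float16` arrays, which makes the trade-off easy to inspect. The sketch below evaluates a hypothetical toy fully connected layer with random weights in both precisions: storage (and therefore memory traffic) is halved, and the output error stays small. It measures numerical error only; it does not measure energy or the accuracy of a trained model.

```python
import numpy as np

rng = np.random.default_rng(0)
# A toy fully connected layer: 256x256 weights (scaled like a typical init) and one input vector.
w32 = (rng.standard_normal((256, 256)) / 16).astype(np.float32)
x32 = rng.standard_normal(256).astype(np.float32)

# The same layer stored and evaluated in half precision.
w16, x16 = w32.astype(np.float16), x32.astype(np.float16)

y32 = w32 @ x32
y16 = w16 @ x16

print("weight memory:", w32.nbytes, "bytes (fp32) vs", w16.nbytes, "bytes (fp16)")
rel_err = np.linalg.norm(y16.astype(np.float32) - y32) / np.linalg.norm(y32)
print(f"relative output error: {rel_err:.2e}")
```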

Many of these interesting architectural questions are already at the forefront of new hardware design given the rise of AI this past decade. However, while the primary metric has traditionally been performance-centric, elevating sustainability as a first-class design constraint presents even more room for innovation with a long-term mindset on the impact of technology on our planet.

The data center effect

One approach that various corporations are taking to offset their carbon footprint is to target "net-zero" carbon emissions. The goal is for all their operations to add no net carbon to the atmosphere, whether through the use of renewable sources, cutting down on emissions, or "buying" carbon credits.

Let's set aside the "buying carbon credits" option for now, as it is typically a public relations stunt and less technologically interesting. So how can data centers and large corporations offset their carbon usage while still sustaining a profitable business?

The answer (as is the objective of this article) is to optimize for sustainable computing!

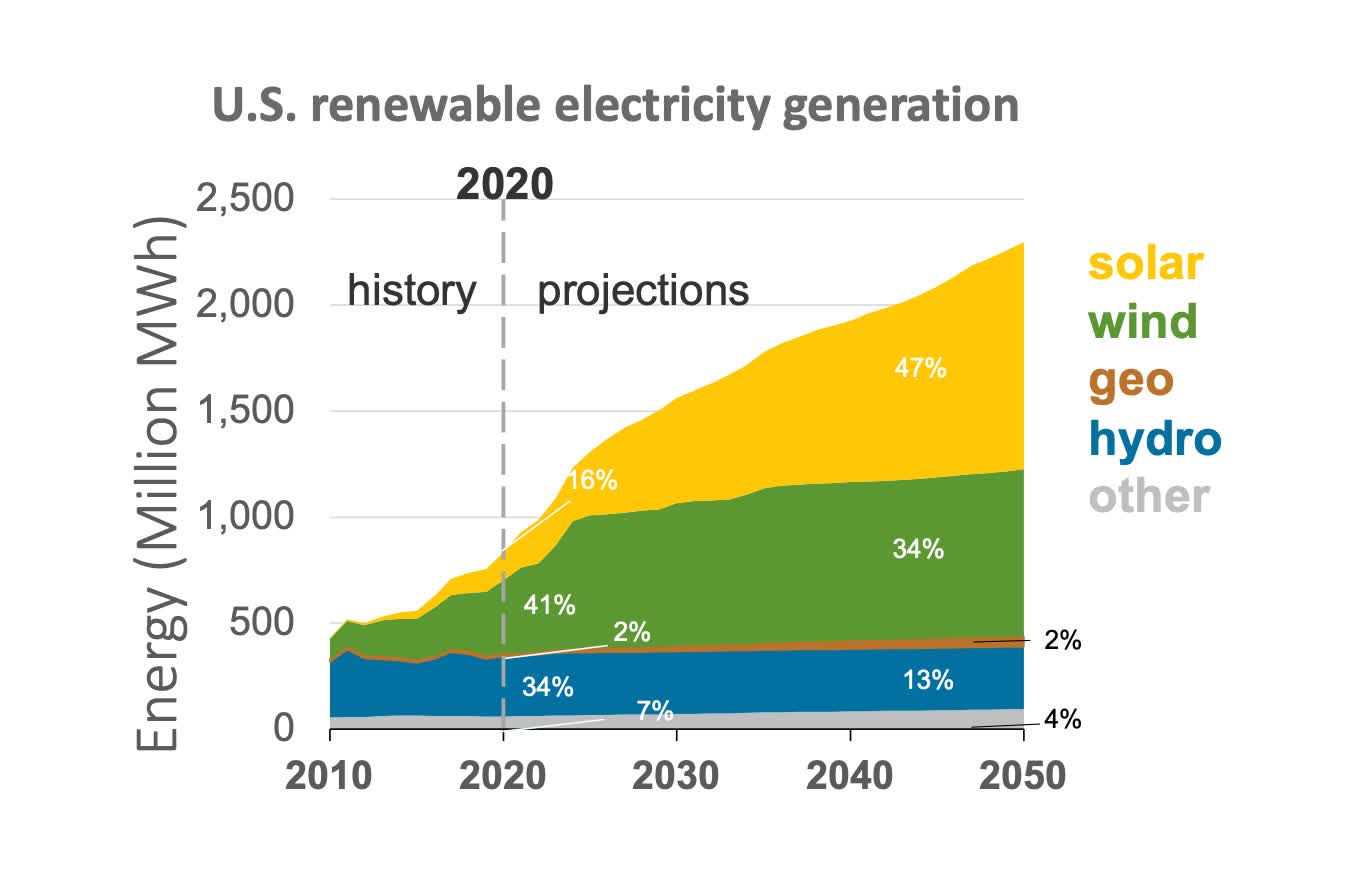

Meta has actually been at the forefront of this effort. A recent paper describes how Meta is exploring ways to use renewable energy sources, such as solar or wind, to power its data centers in an optimized fashion.

For example, solar energy is a cyclical source of power, producing thousands of megawatt hours during the daytime and minimal energy in the evenings. Interestingly, this pattern generally mirrors data center utilization, too: working hours require far more resources during the day than during the evenings. By employing carbon-aware scheduling of their data center resources, operators can leverage renewable energy during the daytime where applicable, and then switch to alternative sources in off-peak hours.
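A minimal sketch of the carbon-aware idea for a single deferrable job (say, a batch training run) is to pick the start hour whose window has the lowest total grid carbon intensity before the job's deadline. This is a generic illustration, not Meta's scheduler; the function name and the hourly intensity profile (dipping at midday when solar output peaks) are made up.

```python
def greenest_start(intensity: list[float], duration: int, deadline: int) -> int:
    """Start hour minimizing the summed carbon intensity of a `duration`-hour job
    that must finish by hour `deadline` (exclusive)."""
    if duration > deadline or deadline > len(intensity):
        raise ValueError("job cannot fit before its deadline")
    candidates = range(deadline - duration + 1)
    return min(candidates, key=lambda start: sum(intensity[start:start + duration]))

# Hypothetical hourly grid carbon intensity (gCO2/kWh) for hours 0..23,
# dipping around midday when solar output peaks.
profile = ([450] * 6
           + [380, 320, 250, 190, 150, 130, 120, 130, 160, 220, 300, 380]
           + [430, 460, 470, 470, 460, 450])

start = greenest_start(profile, duration=4, deadline=24)
best = sum(profile[start:start + 4])
naive = sum(profile[0:4])
print(f"start at hour {start}: {best} vs {naive} if started at midnight")
```

With this profile, a 4-hour job with the whole day available starts at hour 10 to cover the midday solar dip, whereas a job due by hour 8 is pushed as late as possible, to hour 4.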

This approach is primarily focused on operational cost, though, rather than upfront manufacturing cost. To design the data centers of tomorrow, it is important to factor in the upfront costs and model them appropriately. Only then can corporations like Meta decide where to spend their resources: is it better to invest in manufacturing batteries to store renewable energy, or to rely on carbon-aware scheduling over the lifetime of the hardware? This multifaceted equation is not trivial, and companies are now starting to design frameworks to navigate this new frontier.

The good news is that designing for sustainability and reliability will actually be more beneficial in the long run for companies, and positively affect their bottom lines.

The "upfront" cost of understanding how to balance and address carbon will certainly pay dividends for corporations and, more importantly, the planet we all share.

Computing for sustainability

In addition to managing renewable energy sources, there are many other approaches towards sustainable computing that are also promising.

Designing reliable hardware and addressing hardware failures is its own line of research these days. Both Google and Meta have recently noticed that hardware failures are far more common than previously assumed.

Hardware errors, which were presumed to be a one-in-a-million scenario, have recently been profiled and shown to be a one-in-a-thousand phenomenon. Understanding the source of these errors and how to mitigate them will help keep hardware in service longer, amortizing its upfront carbon footprint over more years of use.
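The amortization argument can be made concrete: spreading a fixed embodied footprint over more years of service lowers the footprint attributed to each year. The sketch assumes the hardware's yearly operational emissions stay constant, which ignores that a newer, more efficient replacement might draw less power; the function name and all figures are hypothetical.

```python
def annual_footprint(embodied_kg: float, lifetime_years: float, operational_kg_per_year: float) -> float:
    """Yearly footprint: embodied carbon amortized over the service life, plus operational carbon."""
    return embodied_kg / lifetime_years + operational_kg_per_year

# Hypothetical server: 1,200 kg CO2e embodied, 800 kg CO2e per year to operate.
for years in (3, 4, 5):
    print(f"{years}-year lifetime: {annual_footprint(1200, years, 800):,.0f} kg CO2e per year")
```

Stretching the hypothetical server's life from 3 to 5 years lowers its yearly footprint from 1,200 to 1,040 kg CO2e.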

Intermittent computing is another promising development, where devices turn on when needed and turn off when not. Power gating is not a new technique, but intermittent computing poses many unique challenges, such as determining when to turn devices on and off, how to minimize the time it takes to flip the switch, and, of course, which domains can benefit from intermittent operation.
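Deciding when to switch a device off has a classic break-even form: turning a block off and back on costs some energy, so it only pays off if the idle period is long enough for the saved idle power to repay that cost. The sketch below, with hypothetical function names and numbers, ignores wake-up latency, which in practice is one of the key challenges mentioned above.

```python
def breakeven_idle_seconds(switch_energy_j: float, idle_power_w: float, off_power_w: float) -> float:
    """Minimum idle period for which switching a block off (and back on) saves energy."""
    saved_per_second = idle_power_w - off_power_w
    if saved_per_second <= 0:
        return float("inf")  # switching off never pays off
    return switch_energy_j / saved_per_second

def should_power_off(expected_idle_s: float, switch_energy_j: float,
                     idle_power_w: float, off_power_w: float) -> bool:
    """Power off only if the expected idle period exceeds the break-even time."""
    return expected_idle_s > breakeven_idle_seconds(switch_energy_j, idle_power_w, off_power_w)

# Hypothetical block: 0.02 J to switch off and on, 0.5 W idle, 0.01 W residual leakage when off.
t_be = breakeven_idle_seconds(0.02, 0.5, 0.01)
print(f"break-even idle time: {t_be * 1000:.1f} ms")
print("power off for 1 s idle?", should_power_off(1.0, 0.02, 0.5, 0.01))
print("power off for 10 ms idle?", should_power_off(0.01, 0.02, 0.5, 0.01))
```

The hard part in practice is predicting the idle period; a wrong guess turns the block off just before it is needed again.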

Last but not least, how can we properly recycle our hardware once it is no longer useful?

This is an issue that should be addressed early in the device development process. For example, is it possible to use organic materials to build next-gen hardware, to enable straightforward recycling at the end of its lifetime?

Or could the market pivot to "recycling" older high-end hardware into low-end markets in a directed fashion? Perhaps the server chips of today can become the IoT compute of tomorrow, by properly managing power utilization and building chips in a reconfigurable way. These ideas are starting to appear in smaller circles and research labs, but until larger corporations decide to accelerate this development, they might not fully take off.

Where do we go from here?

Designing the next generation of systems and hardware in a carbon-aware fashion is an exciting and important upcoming phase in the tech industry.

Regardless of the motivation corporations may have to offset their carbon footprint, properly managing carbon involves quite a few technical challenges. This venture requires understanding the end-to-end lifetime carbon footprint of new and existing systems, as well as awareness of modern and upcoming applications (such as AI and edge computing).

Many fundamental design decisions may need to be revisited and incorporated into future system designs. This includes carbon "nutrition facts" labels, which will soon become a default on many spec sheets and which businesses will have to factor into many decisions. Holding big and small corporations alike accountable is important for our planet, and it all begins with understanding the carbon impact of modern technology and systems.
